My Projects

A showcase of my work in AI research, interpretability, and machine learning applications

Research

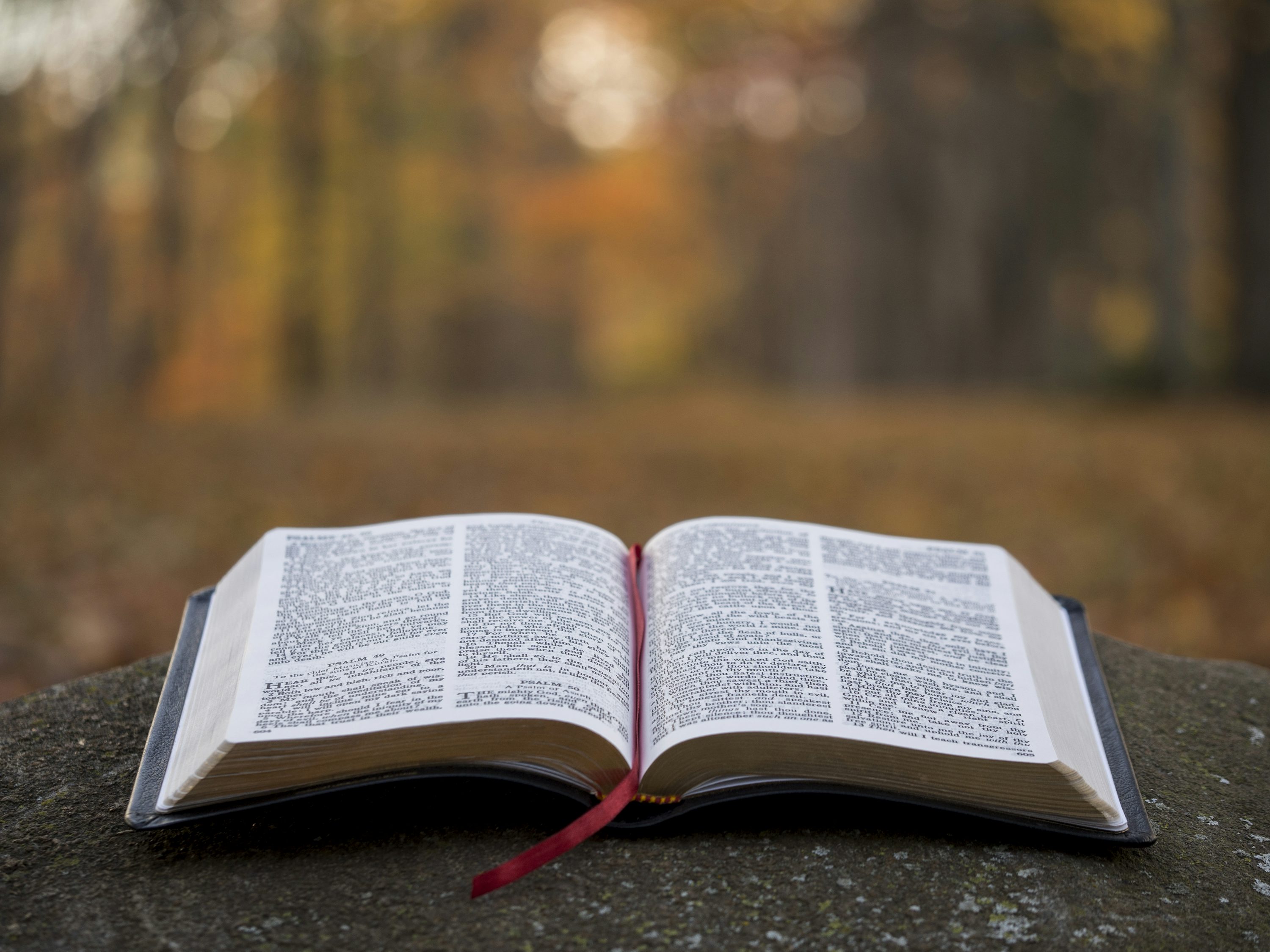

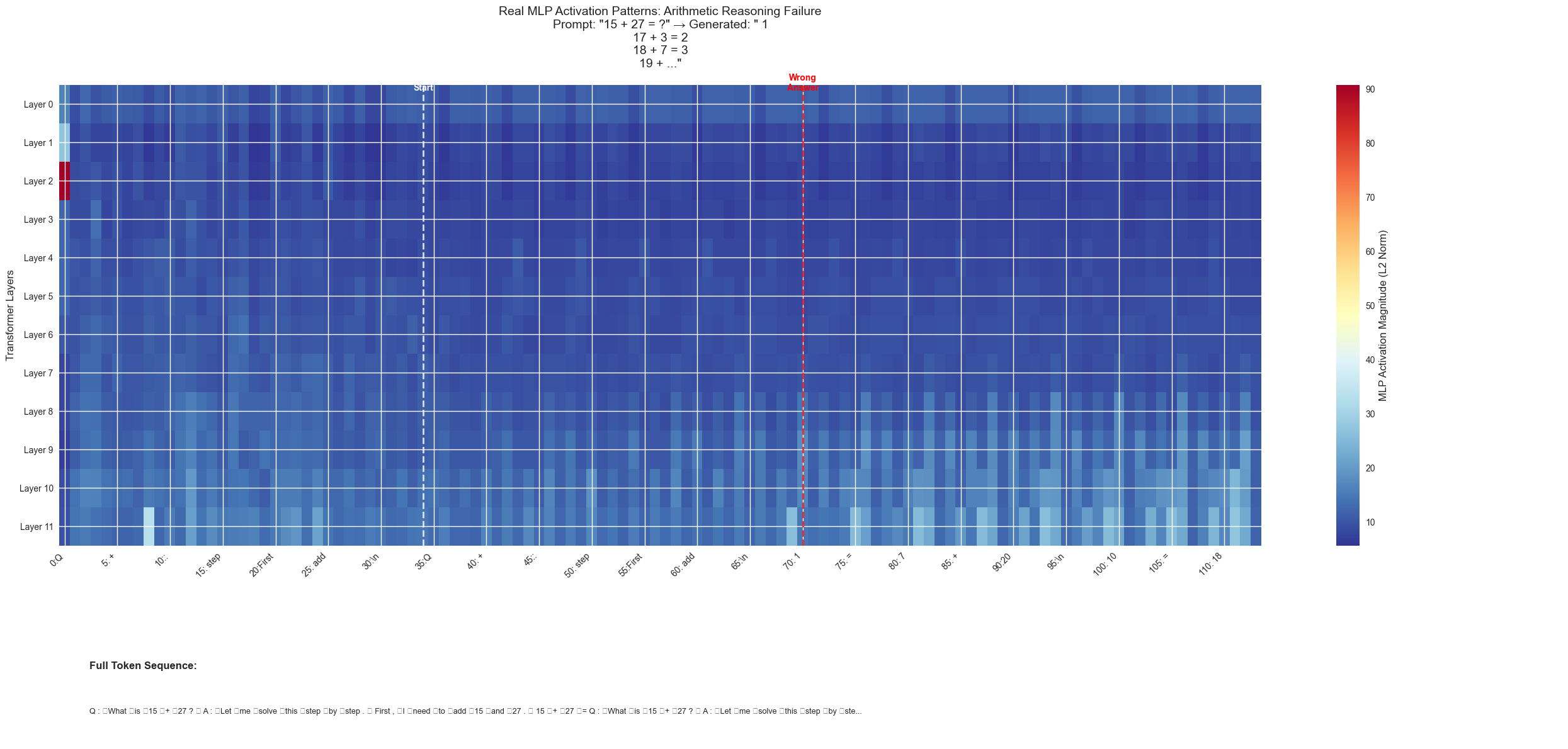

Evaluation Awareness Detection

Mechanistic interpretability experiments detecting "Evaluation Awareness" in LLMs: identifying whether models internally represent that they are being monitored. Probes at layer 16 reached 92.3% accuracy and transferred partially to subtler cues.

Active
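
A minimal sketch of the probing idea, assuming activations have already been extracted from a single layer and labeled 1 for "monitored" prompts and 0 otherwise. The activations below are synthetic, and `train_probe` and `probe_accuracy` are hypothetical helpers. The page does not describe the project's actual probe or dataset, so this uses a logistic-regression probe as one common choice.

```python
import numpy as np

def train_probe(X, y, lr=0.1, epochs=500):
    """Fit a logistic-regression probe (w, b) on activations X (n, d) with labels y in {0, 1}."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # sigmoid of probe logits
        grad = p - y                            # gradient of binary cross-entropy w.r.t. logits
        w -= lr * (X.T @ grad) / n
        b -= lr * grad.mean()
    return w, b

def probe_accuracy(X, y, w, b):
    """Fraction of examples where the probe's sign matches the label."""
    preds = (X @ w + b) > 0
    return float((preds == y.astype(bool)).mean())

# Hypothetical stand-in for one layer's activations: the "monitored" class is
# shifted along a single hidden direction.
rng = np.random.default_rng(0)
d = 64
direction = rng.normal(size=d)
direction /= np.linalg.norm(direction)
y = rng.integers(0, 2, size=400)
X = rng.normal(size=(400, d)) + 4.0 * np.outer(y, direction)

# Train on the first 300 examples, evaluate on the held-out 100.
w, b = train_probe(X[:300], y[:300])
acc = probe_accuracy(X[300:], y[300:], w, b)
print(f"held-out probe accuracy: {acc:.3f}")
```

Holding out examples matters here: a probe is only evidence of an internal representation if it generalizes beyond the prompts it was trained on. Testing on a different cue distribution is one way to measure the kind of transfer mentioned above.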

Research

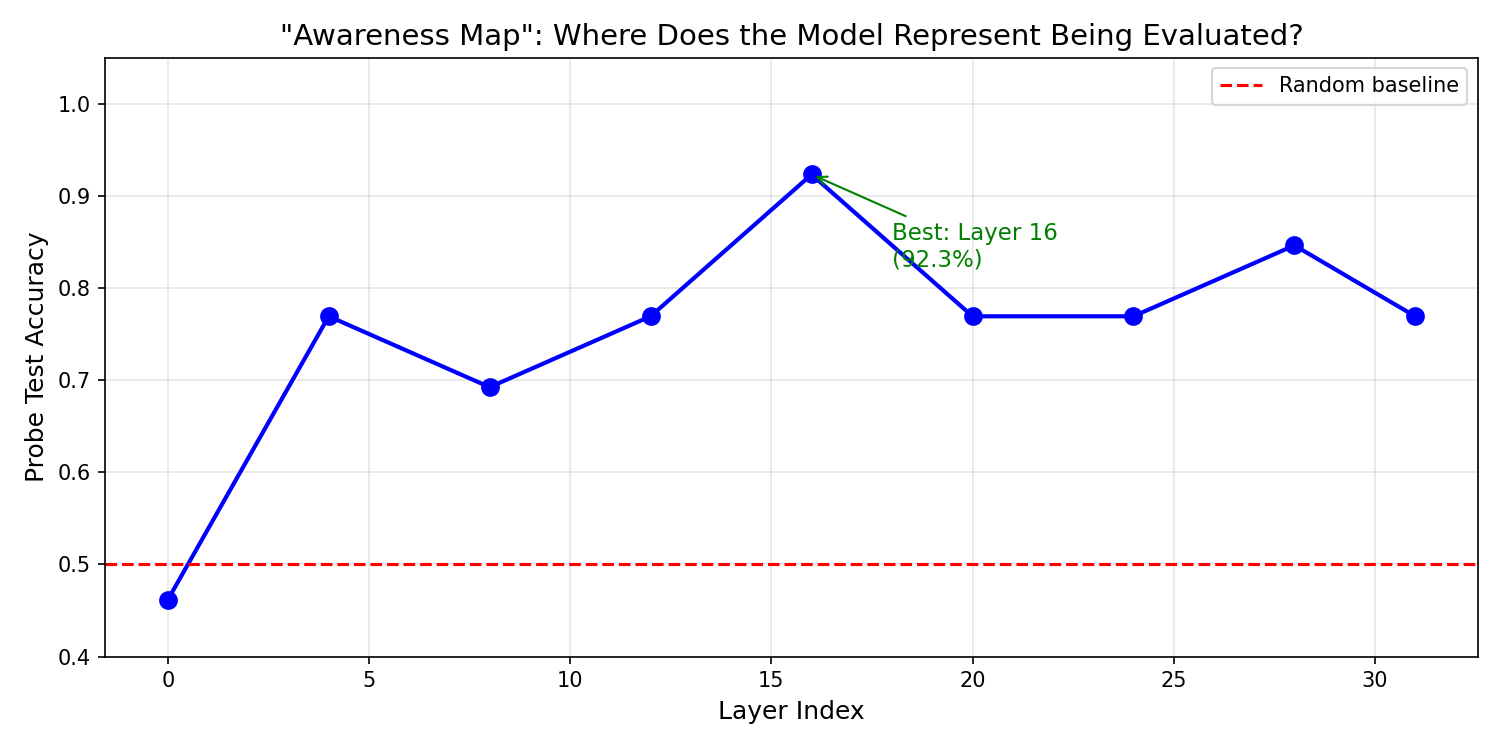

Exploring SAEs

Implementation and analysis of sparse autoencoders (SAEs) for neural network interpretability research. Includes an interactive visualization dashboard and Weights & Biases (W&B) integration.
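
A minimal sketch of an SAE forward pass and loss. It assumes a common formulation: a ReLU encoder into an overcomplete feature dictionary, a linear decoder, and an L1 penalty on feature activations, with the decoder bias subtracted before encoding (one widely used variant). The sizes and `l1_coeff` are illustrative assumptions, not the project's settings. Training, the dashboard, and W&B logging are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_hidden = 16, 64   # overcomplete: more features than input dimensions
l1_coeff = 1e-3              # hypothetical sparsity weight

W_enc = rng.normal(scale=0.1, size=(d_model, d_hidden))
b_enc = np.zeros(d_hidden)
W_dec = rng.normal(scale=0.1, size=(d_hidden, d_model))
b_dec = np.zeros(d_model)

def sae_forward(x):
    """Encode activations x (batch, d_model) into features, then reconstruct them."""
    f = np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)  # ReLU feature activations
    x_hat = f @ W_dec + b_dec                          # linear reconstruction
    return f, x_hat

def sae_loss(x):
    """Mean squared reconstruction error plus an L1 penalty on feature activations."""
    f, x_hat = sae_forward(x)
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=1))
    sparsity = np.mean(np.sum(np.abs(f), axis=1))
    return recon + l1_coeff * sparsity, f

x = rng.normal(size=(8, d_model))  # stand-in for a batch of model activations
loss, f = sae_loss(x)
print(f"loss={loss:.4f}, fraction of active features={np.mean(f > 0):.2f}")
```

The L1 term trades reconstruction quality for sparsity. Raising `l1_coeff` pushes more feature activations to exactly zero, which tends to make individual features easier to interpret.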

Weather Forecasting with LoRA

A research implementation of weather forecasting with LoRA fine-tuning of large language models, following the methodology of Schulman et al. (2025), "LoRA Without Regret". It turns numerical weather data into natural-language forecasts through parameter-efficient fine-tuning with RLHF optimization.
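
The parameter-efficient part can be sketched as a frozen weight matrix `W` plus a trainable low-rank update `B @ A`, scaled by `alpha / r`. This is a generic NumPy illustration of the standard LoRA update, not code from the project. The `LoRALinear` class, the shapes, the rank, and `alpha` are assumed values. The weather-to-text data pipeline and the RLHF stage are not shown.

```python
import numpy as np

class LoRALinear:
    """Frozen linear layer with a low-rank adapter: y = x W^T + (alpha / r) * x A^T B^T."""

    def __init__(self, W, r=4, alpha=8, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = W.shape
        self.W = W                                       # frozen pretrained weight
        self.A = rng.normal(scale=0.01, size=(r, d_in))  # trainable, small random init
        self.B = np.zeros((d_out, r))                    # trainable, zero init: adapter starts as a no-op
        self.scale = alpha / r

    def __call__(self, x):
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def merged_weight(self):
        """Fold the adapter into one matrix for inference."""
        return self.W + self.scale * self.B @ self.A

rng = np.random.default_rng(1)
W = rng.normal(size=(32, 64))
layer = LoRALinear(W, r=4, alpha=8)
x = rng.normal(size=(5, 64))

# With B at zero, the adapted layer reproduces the frozen layer exactly.
assert np.allclose(layer(x), x @ W.T)

# Trainable parameters: r * (d_in + d_out) instead of d_in * d_out.
n_lora = layer.A.size + layer.B.size  # 4 * (64 + 32) = 384
n_full = W.size                       # 32 * 64 = 2048
```

Zero-initializing `B` means fine-tuning starts from the pretrained model's behavior. At rank 4 this layer trains 384 parameters instead of 2,048. After training, `merged_weight()` folds the update back into `W`, so inference costs no extra computation.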

Research

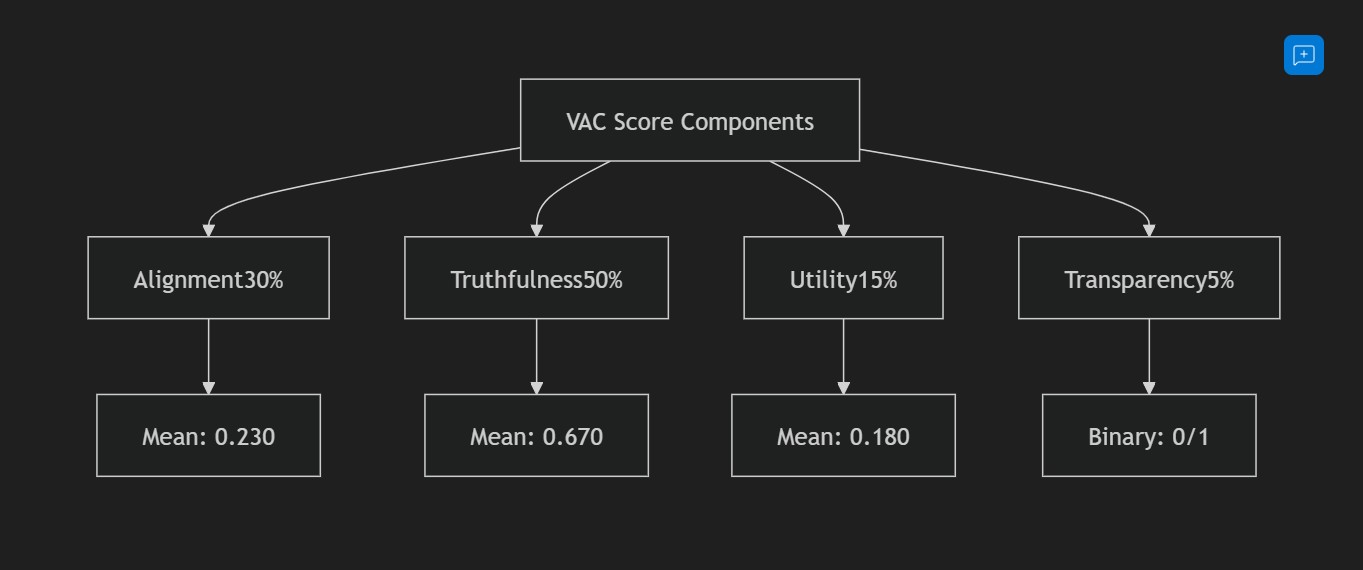

Value-Aligned Confabulation (VAC)

Research framework for evaluating value-aligned confabulation in LLMs: distinguishing beneficial speculation from harmful hallucination. Implements new metrics for the trade-off between truthfulness and utility.

AI Compute Growth Simulator

Interactive simulator exploring exponential growth in AI infrastructure. Visualizes compute capacity, investment costs, power requirements, and environmental impact under adjustable parameters. Inspired by Epoch AI research showing AI compute doubling roughly every 7 months.
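
A fixed doubling time gives a simple exponential: compute after t months is C(t) = C0 · 2^(t / T_d). Here T_d is about 7 months, as stated above. The sketch below is a minimal version under that assumption. The helper names and the starting value of 1.0 are hypothetical, and the simulator's cost, power, and environmental models are not reproduced here.

```python
import math

DOUBLING_MONTHS = 7.0  # ~7-month doubling time stated on this page

def project_compute(c0, months, doubling_months=DOUBLING_MONTHS):
    """Compute capacity after `months`, assuming steady exponential growth."""
    return c0 * 2 ** (months / doubling_months)

def annual_growth_factor(doubling_months=DOUBLING_MONTHS):
    """Multiplicative growth over 12 months."""
    return 2 ** (12 / doubling_months)

def months_to_factor(k, doubling_months=DOUBLING_MONTHS):
    """Months needed for compute to grow by a factor of k."""
    return doubling_months * math.log2(k)

for years in (1, 2, 5):
    factor = project_compute(1.0, 12 * years)
    print(f"{years} yr: x{factor:,.1f}")
print(f"months to 10x: {months_to_factor(10):.1f}")
```

A 7-month doubling time works out to roughly 3.3x growth per year (2^(12/7) ≈ 3.28). Small changes in the doubling time compound quickly over multi-year horizons, which is why exposing it as an adjustable parameter is useful.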